Betting on the Future: Introducing AI-Powered Prototyping

When GenAI design tools like Lovable, Replit, Bolt, and v0 launched in 2024, the hype was deafening. "Build apps in seconds!" "No code required!" The promise was seductive: What if designers (or anyone, for that matter!) could prototype at the speed of thought?

I'm congenitally skeptical of hype. But I'm also a compulsive early adopter who can't resist testing new tools. So when these platforms emerged, I immediately started trialing them…not to chase trends, but to see if they could actually solve real problems we faced at Cencora.

Role

I led the evaluation, business case development, and rollout strategy for Lovable and Figma Make. I directed my team through hands-on testing, presented findings to senior leadership, demoed the tools' potential at a 200+ person org-wide all-hands, secured $60K in pilot funding, and began enterprise adoption before the effort was cut short by my layoff.

The problem

The innovation org my team supported needed to move fast at all times. We were developing new products and services, which meant validating concepts, testing demand, iterating on feedback, and pivoting often.

But our prototyping process had friction:

Coded prototypes were expensive and slow. Because of the nature and complexity of our business, we often relied on coded prototypes to show strategic, multi-billion-dollar customers. Building interactive prototypes required engineering resources. Even "quick" prototypes took weeks or months, and the costs were significant. This made it hard to test multiple concepts or iterate rapidly.

Static mockups weren't enough. Figma prototypes are great for demonstrating flows, but they still don't feel real, and they struggle to handle complex branching logic. Users couldn't interact with functional components, and higher-fidelity tests tend to produce better, more precise feedback.

We couldn't test demand cheaply. I'm a big believer in guerrilla experimentation, such as placing fake ads, building landing pages, and testing willingness to pay before committing resources. But even "fake door" tests often require development work.

The calculus was clear: If we could generate functional prototypes faster and cheaper, we could run more experiments, validate more ideas, and fail faster on the ones that wouldn't work. The question was whether AI tools could actually deliver on that promise—or if they were just expensive toys.

Approach: test everything, trust nothing

When these AI tools launched, I didn't write a business case. I didn't pitch leadership. I just started using them. I'm a strong believer in learning by doing. The fastest way to evaluate a tool is to actually try to build something with it. So I did. Several things, in fact.

Trial phase: Hands-on evaluation

I personally tested all the leading platforms, building simple prototypes to understand their strengths and limitations:

How intuitive was the prompting?

How good was the generated code? (to the best of my ability)

Could it handle real design system components?

How much manual cleanup was required?

What could it do that Figma couldn't?

Pretty quickly, Lovable emerged as the best fit. (At this point, Figma Make hadn’t been released yet.) It was more user-friendly than its competitors and could generate surprisingly sophisticated interactions from natural language prompts.

But I wasn't going to bet the company's money on my opinion alone. I asked one of my reports (essentially our resident creative technologist) to independently validate my findings. He reached the same conclusion: Lovable was the strongest option for our use case.

Right as we were deep in evaluation, Figma announced their AI design-to-code tool, "Figma Make." We tested it immediately. Initial verdict? Promising but not quite there yet. But it had one massive advantage: We already had an enterprise relationship with Figma. Their AI had been vetted by our risk management team. The procurement path was clear. This would matter more than I expected.

Building real prototypes to prove the concept

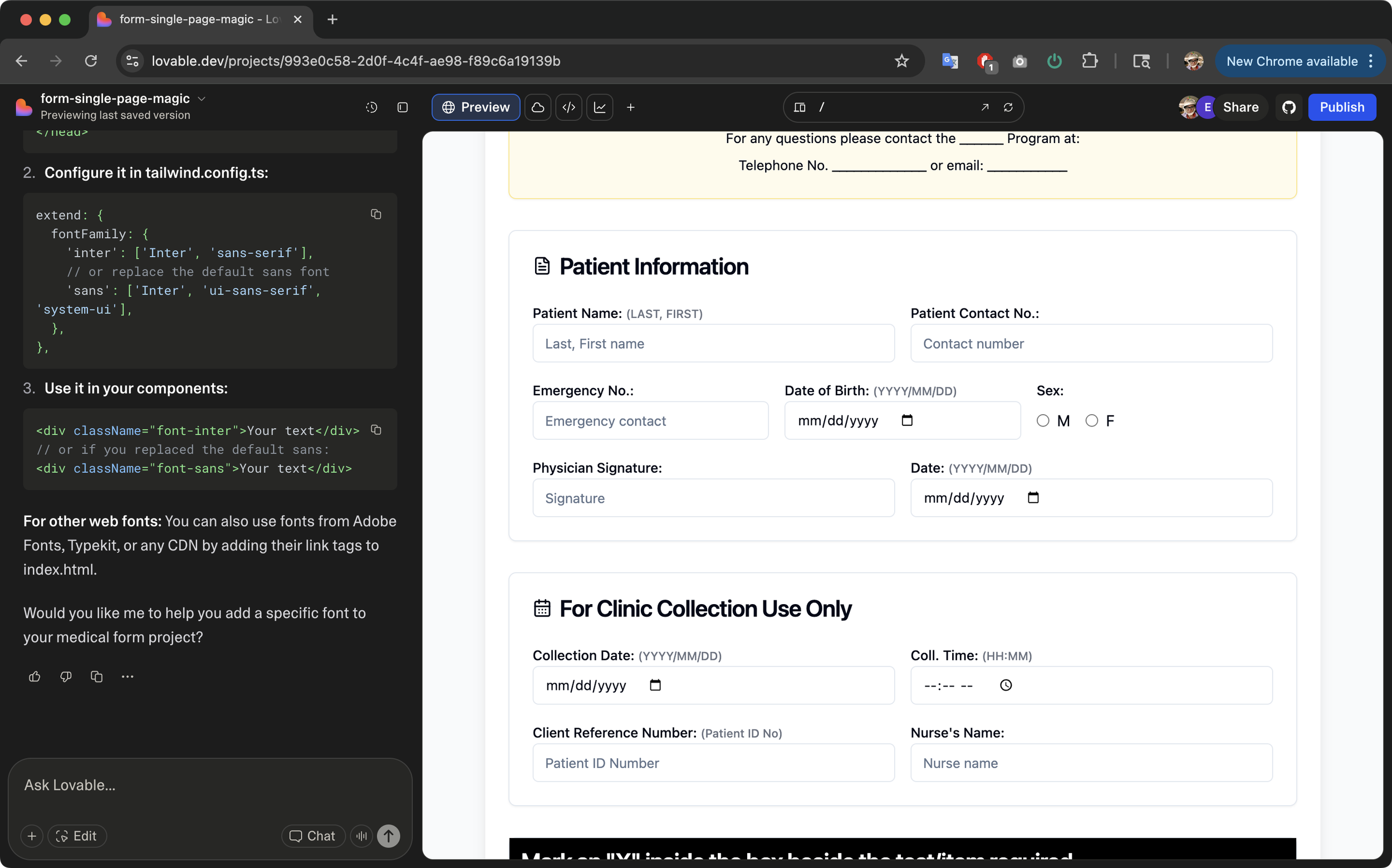

Rather than just presenting theory, I wanted concrete examples. We built several prototypes using both Lovable and Figma Make:

New product concepts:

Essential Med Reserve dashboard (emergency medication management platform)

Radiopharmaceutical therapy coordination platform

Internal contract management tools

Recreating existing designs:

Copied Figma mockups and turned them into functional prototypes

Rebuilt previous prototypes to compare speed and cost

The goal wasn't perfection; it was proof of concept. Could these tools actually replace coded prototypes for early-stage validation? Could they save us real money? We concluded the answer was “yes,” with caveats.

Roadshow

Once we had enough evidence that we were headed down the right path, I wanted to start generating excitement and interest internally. I showed off what we’d been tinkering with in some very large venues.

One was our global marketing summit. I’ve given talks to large crowds before, so this was nothing out of the ordinary. Along with some fellow design leaders, I gave a talk on where the design industry was headed with GenAI tools. It was equal parts "here's what's coming, and why it matters" and "here's what we're doing about it." The goal was to plant seeds and build awareness. I walked through:

What these tools could do

Limitations and where human expertise was still essential

Cost-benefit analysis (potentially $100K-$150K savings per new product)

Time savings (a 2- to 4-month reduction in prototyping time)

Proposed pilot structure

The reaction was extremely positive. People got excited. Leaders started asking "When can we use this?" As a result of these internal pitches, I was able to secure a $60K commitment for enterprise Lovable licenses and began recruiting 5-7 pilot projects to test the tool at scale.

Risk management vs. innovation speed

This is where the story gets frustrating. Cencora's risk management team is, to put it diplomatically, extremely risk-averse. This isn't unusual for a Fortune 10 pharmaceutical distributor. Patient safety, regulatory compliance, and data security are genuinely critical.

But it also means that adopting any new AI tool requires months of security reviews, vendor assessments, and compliance approvals. Lovable was blocked. Despite the business case, despite leadership support, despite $60K in committed budget, our risk team wouldn't approve the tool. A new vendor, an unproven security posture, and generative AI concerns together were a bridge too far.

Figma Make was approved, however. Since we already had an enterprise Figma relationship, Make slid through with minimal friction. Its AI had been pre-vetted, and the procurement path already existed. So we pivoted. Figma Make wasn't as user-friendly as Lovable yet, but it was good enough, it was improving quickly, and it had some advantages over Lovable, like being able to generate Figma files from prompts. More importantly, we could actually use it.

Rolling out the pilot

With approval secured, I started advertising the capability across the company and recruited more product teams for an extended pilot. The plan was to evaluate efficacy across different use cases and to measure time savings, cost savings, and quality outcomes.

The goal was to push the tool hard and find its limits, document what worked and what didn't, and build a playbook for broader adoption. Multiple teams signed up. It felt like we were ready to scale.

What I learned

Introducing AI tools into a Fortune 10 company taught me more about organizational dynamics than about the technology itself.

Show, don't tell. Nobody cares about whitepapers or ROI projections. Building actual prototypes did more to convince stakeholders than any deck ever could.

Existing vendor relationships trump tool quality. Lovable was arguably a bit better than Figma Make at the time. But Figma was already approved. In large enterprises, "good enough and approved" often beats "excellent but blocked."

You need champions who can use the tools. I didn't just pitch the idea; I learned the tools myself and brought along a technical team member to validate the findings. That gave leaders confidence that we weren't chasing shiny objects.

Work with procurement, not against it. Risk management will always be cautious in healthcare. The trick is finding paths of least resistance. Consider prioritizing vendors you already work with.

The pilot was designed to answer critical questions: Which products benefit most? Where do tools break down? How much can we actually save? We had $60K allocated, teams lined up, and leadership support.

Then I got laid off.

But these tools are only going to improve, and they will play a huge part in shaping the design industry over the next decade. I can’t wait to jump back in and keep experimenting.

What I'm most proud of is identifying useful AI tools before they became mainstream, building concrete proof points, securing real budget, and establishing a framework that could survive beyond me. Whether it actually will? That's someone else's story now.